... Sometime in Q2/2019, I purchased a Google Coral Dev Board, a USB Accelerator, a Camera, and an Environmental Sensor Board (see product list here). When I received the package, unfortunately I didn't have much time to work with it, so I started by reading some articles instead:

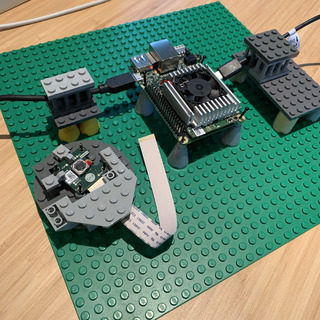

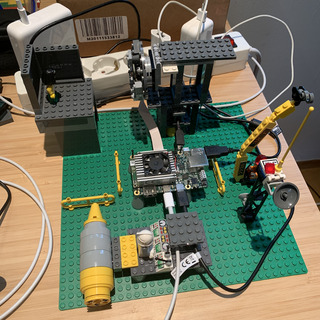

Later on, to experiment with the TPU and object detection, and since I'm a huge fan of LEGO, I decided to create a LEGO figure detector. I built some structures to host the board and the camera in a LEGO landscape:

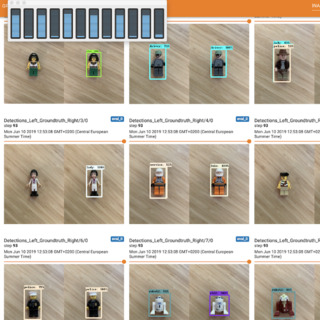

I followed the retrain an object detection model tutorial, but instead of training it to detect pets, I retrained it to detect LEGO figures, and instead of MobileNet SSD v1, I used MobileNet SSD v2. Training requires a labeled image set, so I took approximately 400 pictures of LEGO figures with my phone and the Coral Board's camera. The iPhone produced HEIC images, which had to be converted to JPG:

    # install ImageMagick
    brew install imagemagick
    # bulk convert multiple images
    magick mogrify -monitor -format jpg *.HEIC

Thereafter I used LabelImg for labeling and started a Docker-image-based training on my Mac. It turned out that running the Docker image locally, without a GPU, is not a good choice, so I moved the training to a powerful machine with multiple GPUs.
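Before starting a long training run, it can be worth sanity-checking the labels. The sketch below assumes the annotations were saved in LabelImg's Pascal VOC XML format (LabelImg can also write other formats); the sample annotation, the `lego_figure` label, and the helper names are made up for illustration.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Hypothetical annotation, shaped like a Pascal VOC file written by LabelImg.
SAMPLE_XML = """<annotation>
  <filename>figure_001.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object>
    <name>lego_figure</name>
    <bndbox><xmin>120</xmin><ymin>80</ymin><xmax>260</xmax><ymax>400</ymax></bndbox>
  </object>
  <object>
    <name>lego_figure</name>
    <bndbox><xmin>300</xmin><ymin>90</ymin><xmax>420</xmax><ymax>390</ymax></bndbox>
  </object>
</annotation>"""


def read_boxes(xml_text):
    """Return (label, xmin, ymin, xmax, ymax) tuples from one VOC annotation."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        label = obj.findtext("name")
        bnd = obj.find("bndbox")
        coords = tuple(int(bnd.findtext(k)) for k in ("xmin", "ymin", "xmax", "ymax"))
        boxes.append((label, *coords))
    return boxes


def check_boxes(xml_text):
    """Count boxes per label; raise if a box is empty or outside the image."""
    root = ET.fromstring(xml_text)
    width = int(root.findtext("size/width"))
    height = int(root.findtext("size/height"))
    counts = Counter()
    for label, xmin, ymin, xmax, ymax in read_boxes(xml_text):
        if not (0 <= xmin < xmax <= width and 0 <= ymin < ymax <= height):
            raise ValueError(f"bad box for {label}: {(xmin, ymin, xmax, ymax)}")
        counts[label] += 1
    return counts


counts = check_boxes(SAMPLE_XML)
print(dict(counts))
```

Running the same check over every XML file in the dataset folder would catch mistyped labels and degenerate boxes early, which is cheaper than discovering them after hours of training.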

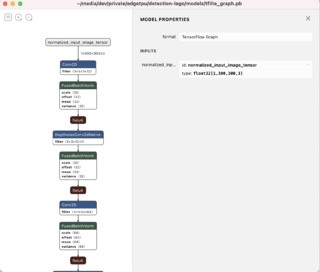

When model training finished, since I was not exactly following the tutorial provided on the Coral page, I had to check out convert_checkpoint_to_edgetpu_tflite.sh for next steps:

    python object_detection/export_tflite_ssd_graph.py \
      --pipeline_config_path=ckpt/pipeline.config \
      --trained_checkpoint_prefix=train/model.ckpt-400 \
      --output_directory=models/ \
      --add_postprocessing_op=true

tflite_graph.pb


... and that TFLite-compatible frozen graph can then be converted to TFLite, including quantization:

    #!/bin/sh
    tflite_convert \
      --output_file=out2.tflite \
      --graph_def_file=tflite_graph.pb \
      --inference_type=QUANTIZED_UINT8 \
      --input_arrays=normalized_input_image_tensor \
      --output_arrays=TFLite_Detection_PostProcess,TFLite_Detection_PostProcess:1,TFLite_Detection_PostProcess:2,TFLite_Detection_PostProcess:3 \
      --mean_values=128 \
      --std_dev_values=128 \
      --input_shapes=1,300,300,3 \
      --change_concat_input_ranges=false \
      --allow_nudging_weights_to_use_fast_gemm_kernel=true \
      --allow_custom_ops \
      --default_ranges_min=0 \
      --default_ranges_max=255
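The `--mean_values=128` and `--std_dev_values=128` flags describe how the quantized uint8 input relates to the real-valued input the network was trained on. As far as I understand the converter documentation, the mapping is `real = (quantized - mean) / std_dev`; the small sketch below just evaluates that formula, and the function names are my own.

```python
MEAN = 128.0     # from --mean_values=128
STD_DEV = 128.0  # from --std_dev_values=128


def dequantize(q, mean=MEAN, std_dev=STD_DEV):
    """Map a uint8 pixel value to the real-valued input the model sees."""
    return (q - mean) / std_dev


def quantize(real, mean=MEAN, std_dev=STD_DEV):
    """Inverse mapping, rounded and clamped to the uint8 range."""
    q = round(real * std_dev + mean)
    return max(0, min(255, q))


print(dequantize(0))    # -1.0
print(dequantize(128))  # 0.0
print(dequantize(255))  # 0.9921875
print(quantize(1.0))    # 255 (256 is clamped to the top of the uint8 range)
```

So with these settings, raw 0..255 pixels cover roughly [-1, 1), which matches the input normalization MobileNet models usually expect. The `--default_ranges_min` and `--default_ranges_max` flags are a separate matter: they supply fallback ranges for tensors that have no recorded min/max, which is convenient for experiments but may cost accuracy.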

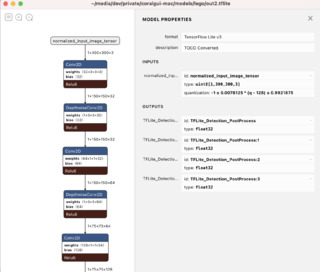

tflite quantized model


To run a TFLite model on the TPU, it must be compiled for the Edge TPU with edgetpu_compiler. Earlier it was possible to do this on the Coral Board itself, but today I would do it on my Mac using docker-edgetpu-compiler:

    Edge TPU Compiler version 2.0.258810407
    Input: out2.tflite
    Output: out2_edgetpu.tflite

    Operator             Count   Status
    CUSTOM               1       Operation is working on an unsupported data type
    CONCATENATION        2       Mapped to Edge TPU
    CONV_2D              34      Mapped to Edge TPU
    DEPTHWISE_CONV_2D    13      Mapped to Edge TPU
    RESHAPE              13      Mapped to Edge TPU
    LOGISTIC             1       Mapped to Edge TPU
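The operator table in the compiler output shows how much of the model actually runs on the TPU. A minimal sketch for summarizing it follows; it assumes the report rows look like the ones above (operator name, count, status separated by whitespace), and the parsing helpers are hypothetical, not part of any Coral tooling.

```python
import re

# Operator rows copied from the compiler output above.
REPORT = """\
Operator             Count   Status
CUSTOM               1       Operation is working on an unsupported data type
CONCATENATION        2       Mapped to Edge TPU
CONV_2D              34      Mapped to Edge TPU
DEPTHWISE_CONV_2D    13      Mapped to Edge TPU
RESHAPE              13      Mapped to Edge TPU
LOGISTIC             1       Mapped to Edge TPU
"""

# Operator names are upper-case, so the mixed-case header row does not match.
ROW = re.compile(r"^(?P<op>[A-Z0-9_]+)\s+(?P<count>\d+)\s+(?P<status>.+)$")
MAPPED = "Mapped to Edge TPU"


def parse_report(text):
    """Return a list of (operator, count, status) tuples."""
    rows = []
    for line in text.splitlines():
        m = ROW.match(line.strip())
        if m:
            rows.append((m["op"], int(m["count"]), m["status"].strip()))
    return rows


def summarize(rows):
    """Return (ops mapped to the TPU, total ops, names of unmapped operators)."""
    mapped = sum(count for _, count, status in rows if status == MAPPED)
    total = sum(count for _, count, _ in rows)
    unmapped = [op for op, _, status in rows if status != MAPPED]
    return mapped, total, unmapped


mapped, total, unmapped = summarize(parse_report(REPORT))
print(f"{mapped}/{total} ops on the Edge TPU, not mapped: {unmapped}")
```

Here the only operator left off the TPU is the single CUSTOM op, which is most likely the detection post-processing op added by `--add_postprocessing_op=true`; it runs on the CPU after the TPU part of the graph has finished.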

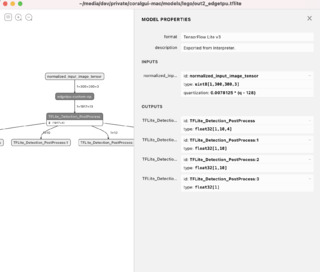

TPU ready model


To experiment with the model, and display what I wanted, the way I wanted, I modified the Google edgetpu_detect_server code and the edgetpuvision code. Be aware that the 'Edge TPU Python API' is now deprecated.
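For anyone starting today, the same kind of experiment can be done with the `tflite_runtime` package instead of the deprecated API. The sketch below is not the modified server code; it only illustrates the idea under a few assumptions: the four model outputs arrive in the order boxes, classes, scores, count (matching the `TFLite_Detection_PostProcess` outputs listed in the conversion step), boxes are normalized `[ymin, xmin, ymax, xmax]`, the Edge TPU library is named `libedgetpu.so.1` (Linux), and the label map is hypothetical.

```python
def load_edgetpu_interpreter(model_path):
    """Create a TFLite interpreter that delegates to the Edge TPU.

    Assumes tflite_runtime and the Edge TPU runtime are installed;
    "libedgetpu.so.1" is the Linux library name.
    """
    from tflite_runtime.interpreter import Interpreter, load_delegate

    interpreter = Interpreter(
        model_path=model_path,
        experimental_delegates=[load_delegate("libedgetpu.so.1")],
    )
    interpreter.allocate_tensors()
    return interpreter


def decode_detections(boxes, classes, scores, count, labels,
                      width, height, threshold=0.5):
    """Turn raw post-processing outputs into pixel-space detections."""
    results = []
    for i in range(int(count)):
        if scores[i] < threshold:
            continue
        # Clamp normalized coordinates to [0, 1] before scaling to pixels.
        ymin, xmin, ymax, xmax = (min(1.0, max(0.0, float(v))) for v in boxes[i])
        results.append({
            "label": labels.get(int(classes[i]), "unknown"),
            "score": float(scores[i]),
            # (left, top, right, bottom) in pixels
            "box": (round(xmin * width), round(ymin * height),
                    round(xmax * width), round(ymax * height)),
        })
    return results


# Made-up outputs for a 300x200 frame: one confident hit, one weak one.
detections = decode_detections(
    boxes=[[0.25, 0.5, 0.75, 1.0], [0.0, 0.0, 1.0, 1.0]],
    classes=[0, 0],
    scores=[0.9, 0.3],
    count=2,
    labels={0: "lego_figure"},
    width=300,
    height=200,
)
print(detections)
```

Keeping the decoding separate from the interpreter makes it easy to test the drawing and filtering logic on a laptop without the board attached.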

Since then, I've built a new version of the rig with custom 3D-printed LEGO parts, started to use an external camera, spent some time figuring out how to improve picture quality, deployed more models, etc. The next part of the Coral story will be about the 3D-printed LEGO parts.
